The IREX Smart City Platform is designed with emerging ethical standards in AI in mind:

1. Bias awareness: All existing face recognition algorithms (like the human brain) show different accuracy rates across demographic groups defined by gender, age, and skin color. This bias is difficult to avoid because of natural variations in training datasets. IREX data scientists and AI engineers work continuously to reduce it. IREX also participates in the NIST Face Recognition Vendor Test (FRVT), which provides an independent evaluation of demographic effects.

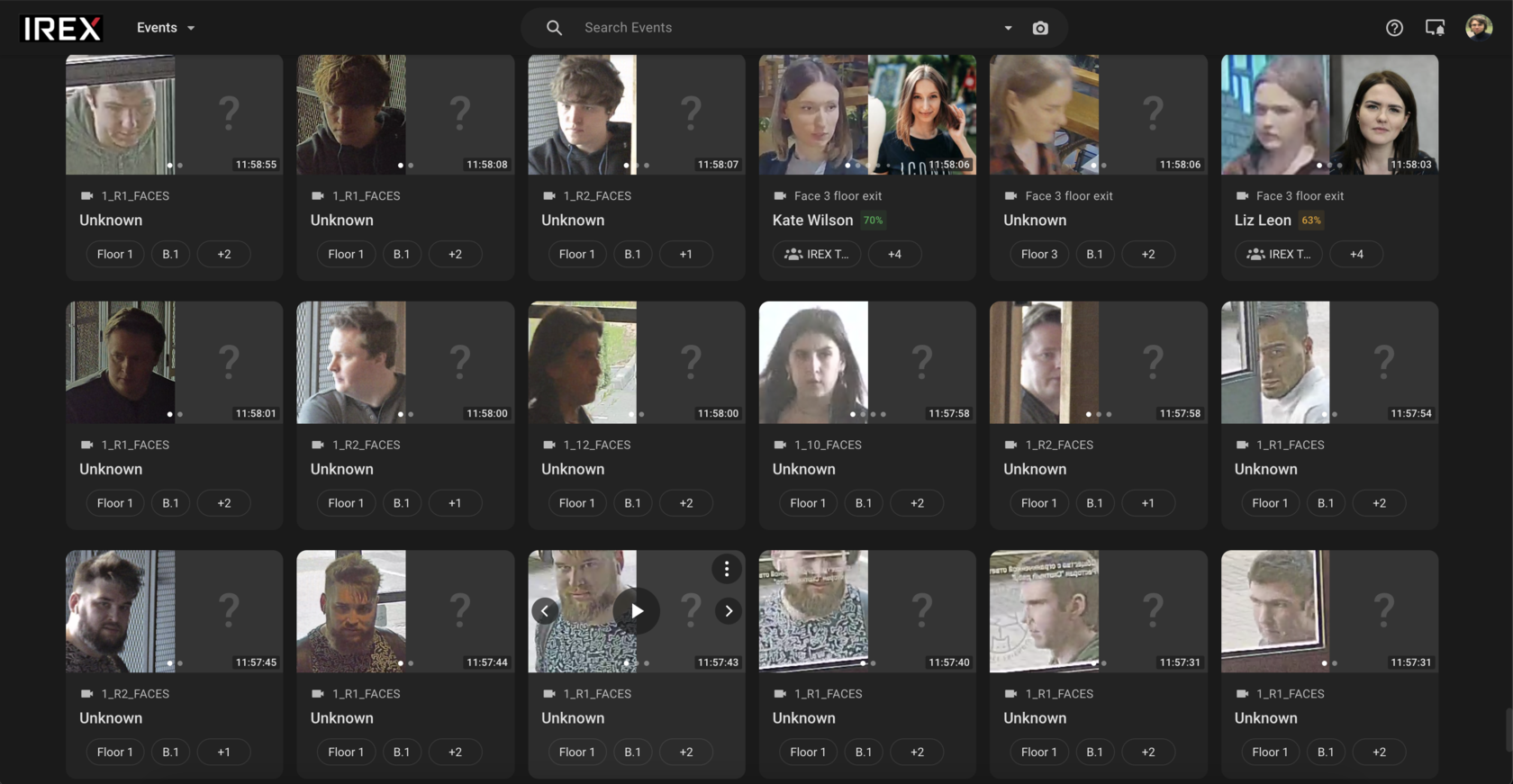

2. Narrow constraints for law enforcement: IREX detects only pre-identified persons, and only to prevent a terror attack, find missing children, or tackle other public safety threats. IREX restricts the number of persons that can be added to the database for real-time biometric identification. Random people are not recognized or tracked.

3. Permission-driven user interface: Platform features, people databases, face match alerts, and search results are visible to authorized staff only. IREX users do not see face recognition data unless they have been added to user groups with sufficient permissions.

4. Full transparency: IREX records a detailed log of every person added to the database and every search query run by photo. The log is fully searchable and auditable.

5. Cybersecurity and privacy protection: IREX has developed arguably the world's most secure platform for Smart Cities and upholds best practices on a daily basis.
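As an illustration only, the capped watchlist, permission checks, and audit log described in points 2 to 4 could be sketched roughly as follows. This is not IREX code: the class names, permission strings, the `max_persons` cap, and the log fields are all hypothetical, and the face matching step is replaced by a simple placeholder.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone


class PermissionDenied(Exception):
    """Raised when a user lacks the permission an action requires."""


class WatchlistFull(Exception):
    """Raised when the watchlist has reached its configured cap."""


@dataclass
class User:
    name: str
    permissions: set[str] = field(default_factory=set)  # hypothetical permission strings


@dataclass
class Watchlist:
    max_persons: int  # hypothetical cap; the real limit is not stated in the text
    persons: list[str] = field(default_factory=list)
    audit_log: list[dict] = field(default_factory=list)

    def _record(self, user: User, action: str, detail: str, allowed: bool) -> None:
        # Every attempt is logged, whether or not it was allowed.
        self.audit_log.append({
            "time": datetime.now(timezone.utc).isoformat(),
            "user": user.name,
            "action": action,
            "detail": detail,
            "allowed": allowed,
        })

    def _require(self, user: User, permission: str, action: str, detail: str) -> None:
        allowed = permission in user.permissions
        self._record(user, action, detail, allowed)
        if not allowed:
            raise PermissionDenied(f"{user.name} lacks {permission}")

    def add_person(self, user: User, person_id: str) -> None:
        self._require(user, "watchlist:add", "add_person", person_id)
        if len(self.persons) >= self.max_persons:
            raise WatchlistFull(f"cap of {self.max_persons} reached")
        self.persons.append(person_id)

    def search_by_photo(self, user: User, photo_ref: str) -> list[str]:
        self._require(user, "search:photo", "search_by_photo", photo_ref)
        # Placeholder for biometric matching: only exact ID matches are returned.
        return [p for p in self.persons if p == photo_ref]

    def find_log_entries(self, **filters) -> list[dict]:
        # Simple exact-match search over the audit log.
        return [e for e in self.audit_log
                if all(e.get(k) == v for k, v in filters.items())]
```

In this sketch a user without the `search:photo` permission cannot run a photo search, yet the denied attempt still appears in the log, so an auditor can later retrieve it with `find_log_entries(user="viewer", allowed=False)`.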
